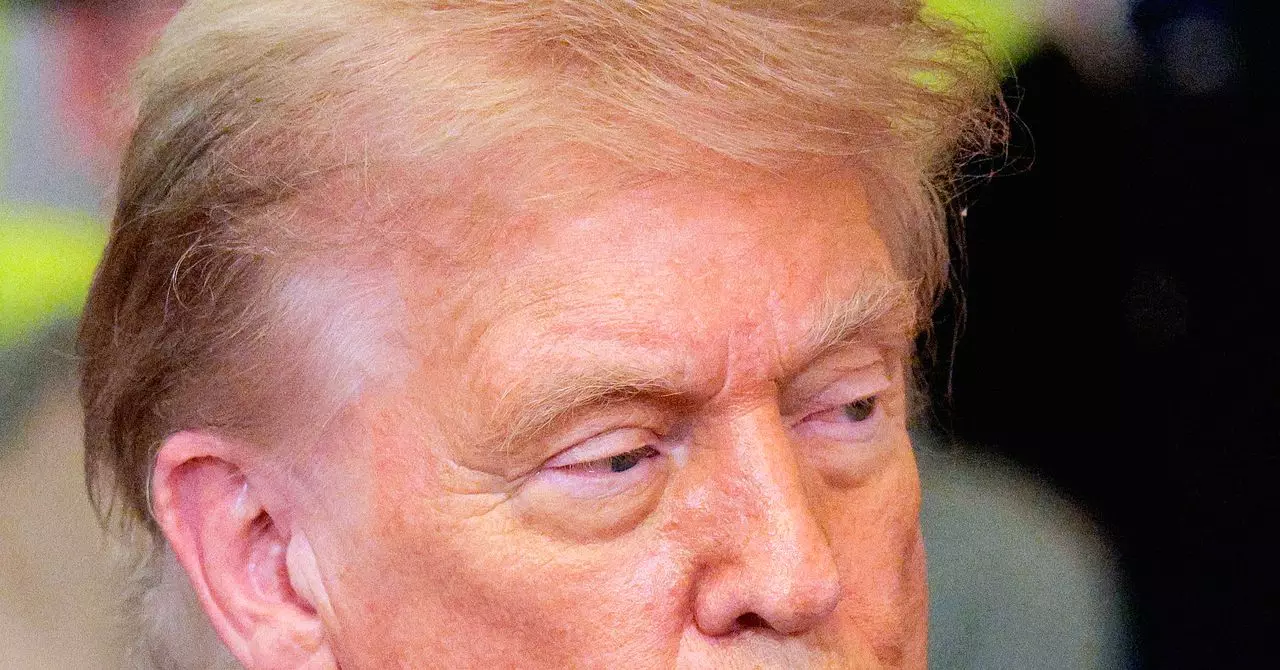

In the whirlwind of congressional negotiations over President Donald Trump’s so-called “Big Beautiful Bill,” one clause has ignited fierce controversy: the AI moratorium provision. Originally designed by White House AI czar David Sacks to impose a ten-year halt on state AI regulations, the measure has stumbled badly in the face of bipartisan opposition. Rather than presenting a clear path forward, the provision’s multiple revisions—moving from a decade-long pause to a diluted five-year moratorium with numerous exceptions—reveal a legislative process muddled by conflicting interests and political maneuvering.

The moratorium isn’t merely a bureaucratic detail; it is a critical battleground where lawmakers grapple with balancing innovation, state sovereignty, and public safety. Senator Marsha Blackburn’s vacillation epitomizes this tension. She initially opposed the moratorium, then collaborated with Senator Ted Cruz on a weakened five-year version, and ultimately rejected that compromise, arguing it would leave children and other vulnerable groups exposed to harm. These reversals reflect both the complexity of regulating advanced technologies and the competing pressures politicians face: from powerful industry stakeholders such as Big Tech on one side, and from local constituencies invested in protective measures, such as Tennessee’s music industry, on the other.

Why the Five-Year Moratorium Falls Short

The five-year revision attempts to thread the needle by carving out exceptions for certain state laws, such as those addressing AI deepfakes, child safety, or deceptive commercial practices. While these exemptions might look promising at first glance, they are undercut by a troubling catch: any state law can be invalidated if it places an “undue or disproportionate burden” on AI or automated decision systems. This vague language effectively erects a legal shield for AI developers, giving companies a powerful tool to challenge and potentially block regulations aimed at protecting society.

Such language is not a minor detail—it fundamentally alters the landscape of accountability. Senator Maria Cantwell’s critique that this provision creates a “brand-new shield” against regulation is well founded. By prioritizing the economic interests of tech giants under the guise of encouraging innovation, the moratorium subverts the hard-earned power of states to safeguard their citizens. This is especially dangerous as AI systems increasingly penetrate everyday life—from social media algorithms influencing public discourse to automated tools affecting employment and criminal justice.

The Real-World Implications for Public Safety and Rights

The stakes extend far beyond legislative tussles. Groups like Common Sense Media warn that the moratorium’s expansive scope could derail nearly all efforts to regulate tech in the name of safety and consumer protection. This is a sobering reality amidst ongoing concerns over harmful AI content, privacy invasions, and the exploitation of marginalized communities—including children and political minorities.

Take, for example, Blackburn’s home state of Tennessee, which recently legislated against AI-generated deepfake impersonations of music artists, a targeted effort to protect artists’ rights and reputations. Although the revised text moves toward exempting such laws, the moratorium’s overarching anti-regulatory stance risks nullifying them under the pretense of “undue burden.” The provision thus threatens to hand Big Tech a “get-out-of-jail-free card,” undermining grassroots and state-level initiatives designed to increase transparency and safety in a high-stakes, fast-moving field.

The Broader Challenge of Regulating AI in a Fragmented System

This moratorium controversy highlights a deeper problem in the U.S. regulatory framework: the tug-of-war between federal uniformity and state innovation. While there is merit in seeking consistent rules to support industry growth, blanket moratoria typically serve corporate interests more than the public’s. If allowed to stand, this sort of federal pause may stunt necessary experimentation with state regulations that could better address the unique risks of AI.

Moreover, the political drama, illustrated by the rapid reversals and cross-party pushback, signals that lawmakers have yet to align on a clear, principled approach to AI governance. The danger lies not only in inadequate oversight but also in the erosion of trust in institutions meant to safeguard democratic values. As AI technologies grow in power and scope, effective regulation will require lawmakers to embrace complexity rather than settle for easy compromises that leave citizens exposed.

Ultimately, protecting society from AI-related harms demands an honest reckoning with the competing priorities of innovation, safety, and democratic accountability. Half-baked moratoria riddled with loopholes will only heighten risks, delaying the crucial work of crafting thoughtful, enforceable safeguards for the AI era.