Sophisticated embedding models have transformed how machines represent and work with human language and other data. Among recent releases, Google’s Gemini Embedding model stands out. Its move to general availability is more than a technical upgrade: it is a strategic step that could reset expectations across the industry. With leading results on the widely used Massive Text Embedding Benchmark (MTEB), Gemini has established itself as the front-runner in a fiercely competitive field. Yet this development is not merely a win for Google; it prompts enterprises to rethink how they choose their AI infrastructure.

The core strength of embeddings lies in their ability to convert complex data (text, images, or video) into numerical vectors that capture its meaning, so that semantically similar items end up close together. This enables applications that go beyond simple keyword search: retrieval systems that match on meaning, intelligent question answering, and context-aware content analysis. As a result, embedding models are becoming the backbone of enterprise AI, powering everything from customer service bots to content recommendations. Google’s Gemini model, with its strong benchmark results and broad applicability, accelerates this trend by offering a single tool that adapts across diverse domains.
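To make the retrieval idea concrete, here is a minimal Python sketch of ranking documents by cosine similarity to a query. The four-dimensional vectors are hand-made, hypothetical stand-ins for real model output: an actual embedding from a model like Gemini would have hundreds or thousands of dimensions and would come from an API call, which is omitted here. The `cosine` and `search` helpers are illustrative names, not part of any library.

```python
import math

# Hypothetical 4-d "embeddings" standing in for real model output.
DOCS = {
    "refund policy for damaged items": [0.9, 0.1, 0.0, 0.2],
    "how to reset your password": [0.1, 0.8, 0.3, 0.0],
    "quarterly earnings report": [0.0, 0.1, 0.9, 0.4],
}


def cosine(a, b):
    """Cosine similarity: dot product divided by the product of vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def search(query_vec, docs, top_k=2):
    """Return the texts of the top_k documents closest to the query vector."""
    scored = [(cosine(query_vec, vec), text) for text, vec in docs.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [text for _, text in scored[:top_k]]


# Hypothetical embedding of the query "I want my money back, the product arrived broken".
query = [0.85, 0.15, 0.05, 0.1]
print(search(query, DOCS))
```

In this toy setup the query and the top-ranked refund document share no keywords at all; the match comes entirely from vector proximity, which is what lets embedding search go beyond keyword matching.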

Google’s Gemini Embeddings: A Versatile and Cost-Effective Solution

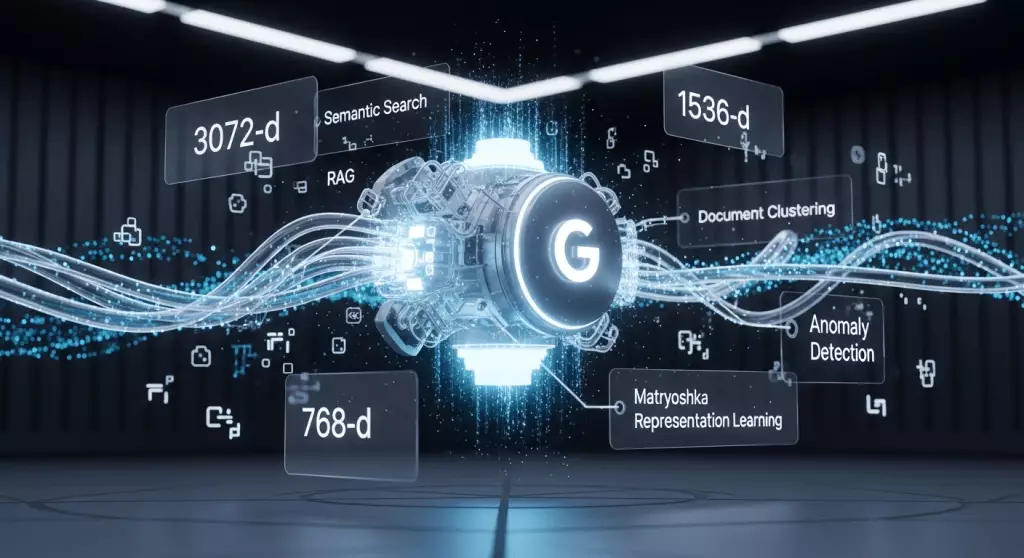

What makes Gemini noteworthy is not only its performance but also its flexible design. Built on Matryoshka Representation Learning (MRL), a training technique that concentrates the most important information in the leading dimensions of each vector, the model can produce embeddings of different sizes, such as 3072, 1536, or 768 dimensions, while retaining most of their semantic quality. This adaptability lets enterprises tune their systems for speed, storage, and accuracy: smaller embeddings speed up real-time applications, save storage, and reduce costs, typically with only a small loss in accuracy.
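The following is a minimal sketch, under stated assumptions, of what using a smaller MRL embedding looks like on the client side: keep the leading coordinates and re-normalize to unit length so that cosine or dot-product comparisons stay consistent. It also computes raw float32 storage for a hypothetical 10-million-vector index at each size. The helper names are hypothetical, and the random vector is only a placeholder: truncation preserves meaning only because an MRL-trained model packs information into the leading dimensions, which a random vector does not. We assume the Gemini API can also return a reduced size directly; check Google’s documentation for the exact parameter.

```python
import math
import random


def truncate_mrl(embedding, dims):
    """Keep the first `dims` coordinates of an MRL-style embedding and rescale to unit length."""
    if dims > len(embedding):
        raise ValueError("cannot truncate to more dimensions than the embedding has")
    head = embedding[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head]


def index_size_gb(num_vectors, dims, bytes_per_value=4):
    """Raw float32 storage for a flat vector index, ignoring index overhead."""
    return num_vectors * dims * bytes_per_value / 1e9


random.seed(0)
# Placeholder for a real 3072-d embedding (hypothetical; not model output).
full = [random.gauss(0.0, 1.0) for _ in range(3072)]

for d in (3072, 1536, 768):
    v = truncate_mrl(full, d)
    squared_norm = sum(x * x for x in v)
    print(f"{d:>4} dims: squared norm={squared_norm:.6f}, "
          f"10M-vector index = {index_size_gb(10_000_000, d):.2f} GB")
```

Storage scales linearly with dimension count, so moving from 3072 to 768 dimensions cuts raw index size by a factor of four (about 122.88 GB versus 30.72 GB here), before any compression or index overhead.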

Google also positions Gemini as a general-purpose, out-of-the-box solution. Its applicability to domains such as finance, law, and engineering reflects a strategic intent to simplify AI deployment across diverse environments. Its supporting infrastructure, namely the Gemini API and integration with Vertex AI, further streamlines adoption, allowing organizations to use the model without extensive fine-tuning or domain-specific retraining.

On cost, Gemini is also attractively positioned at $0.15 per million input tokens. This pricing puts high-quality embeddings within reach of a wide range of companies, from startups to large enterprises. With support for more than 100 languages, Gemini is clearly designed for a global user base.
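Per-token pricing turns cost planning into simple arithmetic. This minimal Python sketch applies the $0.15-per-million-input-token rate quoted above; the corpus size and average document length are hypothetical, and the calculation ignores batch discounts, re-embedding, and vector storage, so treat it as a rough estimate and confirm current pricing with Google.

```python
PRICE_PER_MILLION_TOKENS_USD = 0.15  # rate quoted in the article; verify before relying on it


def embedding_cost_usd(num_docs, avg_tokens_per_doc,
                       price_per_million=PRICE_PER_MILLION_TOKENS_USD):
    """Estimate the one-time cost of embedding a corpus, counting input tokens only."""
    total_tokens = num_docs * avg_tokens_per_doc
    return total_tokens / 1_000_000 * price_per_million


# Hypothetical corpus: 50,000 documents averaging 800 tokens each (40M tokens).
cost = embedding_cost_usd(50_000, 800)
print(f"${cost:.2f}")  # $6.00
```

Because the estimate is linear, doubling either the number of documents or their average length doubles the cost.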

The Competitive Landscape: Balancing Power, Flexibility, and Control

While Gemini’s debut is impressive, the embedding market remains highly competitive. OpenAI’s models, for instance, are still widely adopted thanks to their proven performance and ease of integration. Meanwhile, open-source alternatives such as Alibaba’s Qwen3-Embedding promise comparable performance under permissive licenses like Apache 2.0. These open models threaten to erode proprietary advantages by offering low-cost, customizable options that can be fine-tuned for niche applications.

Specialized models, such as Mistral’s code-focused embedding model and Qodo’s Qodo-Embed-1-1.5B, illustrate a shift toward domain-specific performance. Mistral’s focus on code retrieval, for example, gives developers a tailored tool that can outperform generalist models on those specific tasks. Similarly, Cohere’s Embed 4 targets enterprise environments full of noisy, unstructured data, such as messy documents, scanned texts, and inconsistent formats, aiming for robustness in real-world conditions.

This diversity creates a crucial decision point for organizations: should they choose a top-ranked proprietary model like Gemini for its ease of use and broad applicability, or adopt open-source, customizable models for greater control and potential cost savings? For businesses with strict data sovereignty requirements, or those that want fully self-hosted models, open-source alternatives are particularly appealing. The choice comes down to strategic priorities: speed of deployment versus autonomy.

Strategic Implications for the Future of AI Adoption

The evolution of embedding models exemplifies a broader shift in AI development toward democratization and specialization. Google’s Gemini, as a leading generalist, sets a high-performance standard that many will aspire to match or surpass. Nevertheless, the emergence of open-source competitors underscores an ongoing tension: flexibility and control versus convenience and speed.

Enterprises adopting Gemini must weigh its benefits—such as seamless integration into Google Cloud and mature deployment pipelines—against potential limitations like dependency on API access and data privacy concerns. Conversely, opting for open-source solutions like Qwen3-Embedding offers advantages in customization and sovereignty but often involves more complex setup and maintenance.

In the broader context, this competitive dynamic accelerates innovation, pushing providers to develop more nuanced, capable models. It also compels organizations to reevaluate their AI strategies, balancing the allure of cutting-edge performance with long-term control and cost considerations. The winners will be those who can effectively navigate this landscape, leveraging the strengths of both proprietary and open-source solutions to craft AI systems that are not only powerful but also adaptable to evolving needs.

Ultimately, the emergence of Google’s Gemini as a top-tier embedding model marks a pivotal moment—one that underscores the importance of strategic choice in AI investments. As the field advances rapidly, staying critical, adaptable, and forward-thinking will remain essential for organizations eager to harness the true potential of AI embeddings.