In the rapidly advancing realm of artificial intelligence (AI), the promise of safeguards against harmful or inappropriate content has become less of a guarantee and more of an illusion. Many companies point to filters and policies as evidence of their ethical commitments; however, a closer examination reveals these measures are often superficial, easily bypassed, and fundamentally insufficient. This discrepancy undermines public trust and raises serious concerns about the unchecked proliferation of offensive material, especially when it involves celebrities, minors, or vulnerable groups.

AI developers frequently claim that they restrict the generation of adult content, hate speech, and depictions of minors in inappropriate contexts. The reality is more troubling. In some recent AI tools, default or optional “spicy” modes produce explicit or suggestive imagery regardless of the company’s stated policies. The contradiction is stark: these tools ship with disclaimers about responsible use, but their design appears to favor entertainment and sensationalism over safety.

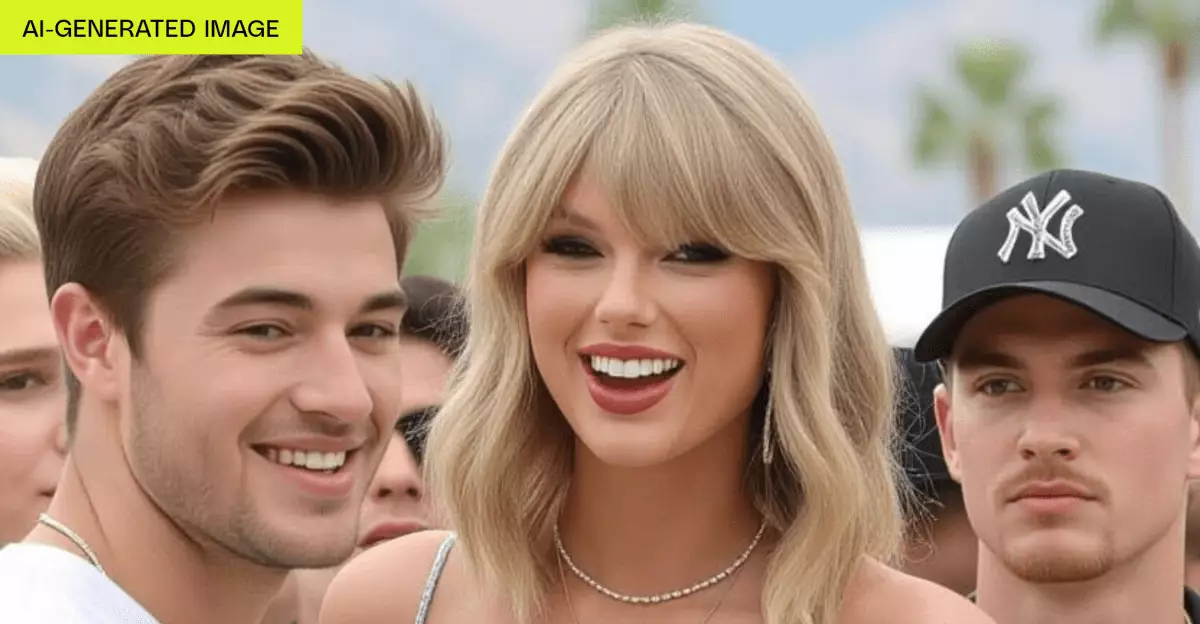

What’s particularly disturbing is how easily users can circumvent these constraints. In some cases an age verification step exists but is so minimal or poorly enforced that anyone, regardless of age, can bypass it. As a result, platforms presented as spaces for responsible engagement become avenues for creating potentially harmful material, whether deepfake celebrity videos, suggestive imagery, or even borderline illegal depictions of minors. The risk is clear: when a safeguard can be skipped at will, the platform’s claim to moderate content collapses.

The Dangerous Overconfidence of Tech Companies and the Reality of Deepfake Risks

Many AI companies seem overconfident about their ability to control what their models produce. They publicly announce bans on “pornographic” or “illegal” content but fail to back them with robust technical safeguards. Often the restrictions are loosely applied or not enforced at all, especially when features like “spicy” modes are activated. These modes are marketed as entertainment, yet they facilitate the creation of unauthorized or exploitative content, including deepfake videos that place celebrities in compromising situations.

Particularly alarming is the case of celebrity deepfakes, which the industry has grappled with for years. Despite laws like the Take It Down Act and similar legal frameworks, current tools can generate images or videos of recognizable faces with almost no effort. When combined with minimal age checks or absent consent protocols, the risk of exploitation and defamation grows sharply. The tools publicly accessible today, often through mobile apps or web services, put deepfake creation within reach of almost anyone, a development that threatens individual privacy, dignity, and reputation.

Furthermore, the ability to generate photorealistic depictions of children is deeply concerning, even if the system refuses to animate them or produce explicit material. It points to a troubling gap: the refusals block some outputs, but the underlying capability remains available to users who are determined to misuse it. This discrepancy between stated safeguards and real-world outcomes reflects a serious underestimation of the risks involved and signals a dangerous complacency in the AI community.

The Ethical Vacuum and the Need for Genuine Safeguards

The broader issue lies in the ethical vacuum created by companies that prioritize user engagement and sensational content over genuine safety measures. The fact that an app like Grok Imagine can be used to generate suggestive videos of celebrities with almost no accountability shows that the industry’s current approach to safety is inadequate. Relying on vague policies and minimal verification is not enough in a landscape where malicious actors can sidestep superficial measures with ease.

What is needed is a fundamental re-evaluation of how AI models are designed, trained, and deployed. Stronger technical safeguards, such as AI-powered filters that resist simple workarounds, more rigorous age-verification mechanisms, and real-time moderation, must be built in if these platforms are to be taken seriously as ethical tools. Users should not be left to navigate a minefield of openly accessible offensive content, especially when the technology holds such power to manipulate reality.
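
To make the idea of layered safeguards concrete, here is a minimal Python sketch of a generation gate that fails closed: a request is refused unless every check passes, and a check that cannot run counts as a refusal. It is an illustration under stated assumptions, not a description of how any real product works. The `Request` fields, `allow_generation`, `toy_classifier`, and the 0.5 threshold are all hypothetical names and values chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass(frozen=True)
class Request:
    """Hypothetical request record; every field is an assumption for illustration."""
    prompt: str
    age_verified: bool         # assumed to come from a server-side check
    depicts_real_person: bool  # assumed output of an upstream likeness detector
    has_consent: bool          # assumed consent record for the depicted person


# A classifier returns a risk score in [0, 1], or None if it could not run.
Classifier = Callable[[str], Optional[float]]


def allow_generation(
    req: Request, classifier: Classifier, threshold: float = 0.5
) -> Tuple[bool, str]:
    """Return (allowed, reason). Any failed or unavailable check blocks the request."""
    if not req.age_verified:
        return False, "age not verified"
    if req.depicts_real_person and not req.has_consent:
        return False, "no consent for real-person likeness"
    score = classifier(req.prompt)
    if score is None:
        # Fail closed: an unavailable classifier must not default to "allow".
        return False, "classifier unavailable"
    if score >= threshold:
        return False, "risk score above threshold"
    return True, "ok"


def toy_classifier(prompt: str) -> Optional[float]:
    """Stand-in for a real content classifier; a keyword match is NOT a real filter."""
    return 0.9 if "explicit" in prompt.lower() else 0.1


if __name__ == "__main__":
    print(allow_generation(Request("a mountain landscape", True, False, False), toy_classifier))
    print(allow_generation(Request("portrait of a celebrity", True, True, False), toy_classifier))
```

The design choice worth noting is the order of failure: the gate never treats a missing signal as permission. A real system would need far stronger components behind each field, but the same principle applies to age verification and consent checks as to the content filter.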

The pressing question is not only one of regulation but of moral responsibility. The AI industry must recognize that its creations do not exist in a vacuum; they have real-world consequences. Without meaningful safeguards, the AI landscape risks becoming a Wild West in which entertainment and exploitation become indistinguishable. Falling short on this front does not just diminish the industry’s credibility; it actively endangers societal values and individuals’ rights.

In short, the illusion of safeguards must be shattered. True safety in AI-generated content requires concerted effort, innovative technical solutions, and an unwavering commitment to ethical stewardship. Only then can these powerful tools evolve into responsible innovations rather than dangerous loopholes.