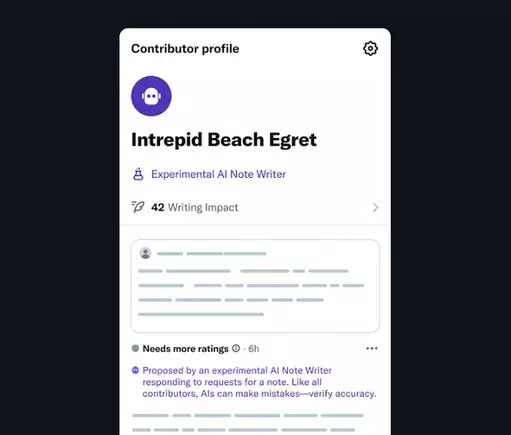

The digital age demands new ways to combat misinformation, and X's introduction of AI Note Writers, automated bots that can draft Community Notes, is a bold attempt to change how facts are verified online. The move promises to extend the reach and speed of fact-checking. It also signals an acknowledgment that human contributors alone cannot keep pace with how quickly information now spreads. Used well, AI could make accurate context more accessible and more immediate. However, the shift raises critical questions about accuracy, bias, and the influence of powerful personalities on the integrity of online discourse.

The Promise of Automation: Improving Scale and Speed

One of the most compelling advantages of AI Note Writers is their potential to expand the scope of fact-checking dramatically. Human contributors are valuable but limited by time, resources, and their own biases. Automated bots, each designed to operate within a defined niche, can process large volumes of posts quickly and attach contextual notes with references to verifiable sources. That scale could make corrections both faster and more widespread. Because community members still rate the helpfulness of AI-generated notes, those ratings also form a feedback loop that can improve the bots' accuracy over time.

The Influence of Elon Musk and the Challenge of Bias

Despite the promising technology, the influence of Elon Musk, a prominent figure with outspoken opinions, introduces a turbulent undercurrent. Musk's recent criticism of Grok, the chatbot built by his own company xAI, highlights a crucial concern: what happens when the platform that hosts fact-checking is entangled with its owner's personal or ideological preferences? His dismissal of sources such as Media Matters and Rolling Stone, and his call for users to submit "politically incorrect, but nonetheless factually true" statements to retrain Grok, suggest a tendency toward curated truth: information chosen because it fits his worldview rather than because it is accurate. If AI Note Writers are to be effective, transparency and neutrality must come first. Otherwise, these tools risk becoming instruments of ideological control, filtering information to serve a particular narrative rather than the broader pursuit of truth.

Balancing Truth and Political Influence

Implementing AI in fact-checking is a complex balancing act. On one hand, it promises faster, data-backed responses that can challenge dubious claims quickly. On the other, if the AI's data pool is compromised or deliberately skewed, particularly toward the views of influential figures, the whole process becomes a façade of objectivity. Musk's push to reshape Grok's training data around his own idea of "politically incorrect" truths illustrates the problem and raises a broader question: who controls the data, and what incentives drive that control? An AI that reflects a single ideological line cannot act as a fair arbiter of truth. Instead, it becomes a gatekeeper for one worldview, eroding community trust and the open discourse that fact-checking is meant to protect.

Potential and Peril: The Future of AI-Enhanced Fact-Checking

While skepticism is warranted, the potential benefits of AI-assisted Community Notes are substantial. With safeguards for neutrality and transparency in place, these tools could help communities respond to falsehoods more quickly, while human reviewers continue to handle nuance and context. The key is vigilance: the AI must be trained on diverse, verified sources, and input from varied perspectives must carry real weight. Without those safeguards, AI tools could hinder honest dialogue rather than foster it.

X's decision to bring AI into its fact-checking ecosystem marks a pivotal moment in digital communication. It reflects a broader societal challenge: how to harness technology for truth without trading integrity for convenience or influence. AI Note Writers could greatly increase the scale and speed of community-driven verification, but they also expose vulnerabilities around bias and control. Time will tell whether this leap produces a genuine guardian of facts or just another battleground for ideological dominance. Either way, the future of online truth hangs on whether these systems can remain fair, transparent, and accountable despite the sway of powerful figures.