In recent years, facial recognition technology has been at the forefront of the privacy debate, and Meta is making headlines with it yet again. The company is testing new security processes that use facial recognition to combat fraud, particularly “celeb-bait” scams, while trying to navigate the public skepticism and privacy concerns that have dogged it in the past. Examining these developments reveals both the potential advantages and the notable pitfalls of such measures.

Celeb-bait scams exploit the public’s fascination with celebrities. Scammers run ads featuring images of well-known public figures to lure users into clicking, then steer them into fraudulent schemes on malicious websites. In response, Meta is piloting a facial matching process that compares the faces featured in ads with those of verified public figures on Facebook and Instagram. If a face matches and the ad cannot be validated as genuine, Meta can block it as a scam.
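To make the match-then-validate flow concrete, here is a minimal Python sketch. It is not Meta’s implementation. It assumes faces have already been converted into numeric embedding vectors by some face-recognition model, compares them with cosine similarity against a fixed threshold, and uses a hypothetical set of authorized advertiser accounts as a stand-in for the legitimacy check. The class names, fields, and the 0.8 threshold are all illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class PublicFigure:
    name: str
    profile_embeddings: list[list[float]]  # hypothetical: vectors derived from profile pictures
    authorized_advertisers: set[str]       # hypothetical: accounts allowed to run ads with this face


@dataclass
class Ad:
    ad_id: str
    advertiser_id: str
    face_embeddings: list[list[float]]  # one vector per face detected in the ad image


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def review_ad(ad: Ad, figures: list[PublicFigure], threshold: float = 0.8) -> str:
    """Return "block" if a face in the ad matches a public figure and the ad is not validated."""
    for face in ad.face_embeddings:
        for figure in figures:
            is_match = any(
                cosine_similarity(face, ref) >= threshold for ref in figure.profile_embeddings
            )
            # Assumed legitimacy check: only the figure's authorized accounts may use the face.
            if is_match and ad.advertiser_id not in figure.authorized_advertisers:
                return "block"
    return "allow"
```

In this sketch, an ad from an unknown account whose face embedding closely matches a figure’s profile picture is blocked, while the same image run by one of that figure’s authorized accounts is allowed. In any real system, the threshold trades false matches against missed scams.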

This proactive stance shows Meta turning the technology against malicious actors in an effort to safeguard users. Still, it raises questions about how facial data is used, particularly with respect to privacy protections and the ethical implications of storing or processing facial images.

Meta’s renewed embrace of facial recognition for security comes against a history of backlash over data privacy. In 2021, the company shut down its facial recognition system amid global scrutiny of how such data could be misused, concerns sharpened by reports from places like China, where facial recognition has been used for unwarranted surveillance and tracking of marginalized groups. These precedents highlight the real risks that accompany facial recognition technology and its potential for misuse when data security measures are not rigorously enforced.

Many civil liberties advocates also warn that facial recognition systems are often less accurate when identifying people of color and members of other marginalized communities. These concerns illustrate the challenge Meta faces as it reintroduces parts of the technology: balancing the need for security against calls for accountability and responsible use.

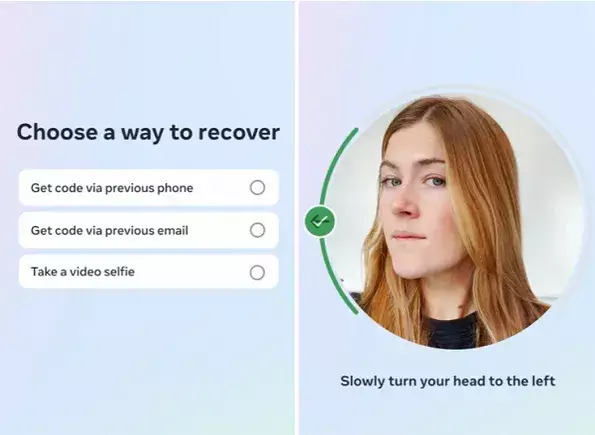

Another facet of Meta’s return to facial recognition is its testing of video selfies as an identity verification tool for account recovery. A user trying to regain access to an account would upload a video selfie, which is then compared against the account’s existing profile pictures to confirm identity. The method resembles the face-based verification commonly used on mobile devices, suggesting that Meta is trying to offer a user-friendly feature while securing a particularly vulnerable point of interaction.

To ease concerns about the storage of facial data, Meta states that video selfies will be encrypted and securely deleted after a one-time comparison. However, as history has shown, trust in data protection can be tenuous, and it remains uncertain how users will react to this new verification method.
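The one-time comparison can be sketched the same way. The Python below is a hypothetical illustration, not Meta’s method. It assumes each video frame and each profile picture has already been reduced to an embedding vector, treats the selfie as verified when enough frames match at least one profile picture, and clears the selfie-derived vectors before returning, whatever the outcome. The function name, the 0.8 similarity threshold, and the 60% frame ratio are illustrative assumptions. Encryption is outside the scope of the sketch, and clearing a Python list only drops references; it is not a secure wipe.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def verify_video_selfie(
    selfie_frames: list[list[float]],
    profile_embeddings: list[list[float]],
    threshold: float = 0.8,
    min_frame_ratio: float = 0.6,
) -> bool:
    """Hypothetical one-time check of selfie frames against profile-picture embeddings."""
    try:
        if not selfie_frames or not profile_embeddings:
            return False
        # Count frames whose best match among the profile pictures clears the threshold.
        matched = sum(
            1
            for frame in selfie_frames
            if max(cosine_similarity(frame, ref) for ref in profile_embeddings) >= threshold
        )
        return matched / len(selfie_frames) >= min_frame_ratio
    finally:
        # Discard the selfie-derived vectors after the single comparison (not a secure erase).
        selfie_frames.clear()
```

Requiring a share of frames to match, rather than a single frame, is one simple way to make a brief lucky match less decisive; the actual criteria Meta uses are not described in the source.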

While facial recognition technology offers a robust way to strengthen security protocols, its deployment by a company like Meta raises many questions about privacy and ethical use. The tension between protecting users from fraud and protecting their privacy shows how complex such technology is to deploy in practice. Facial recognition can indeed be an effective deterrent against fraud, but concern over systemic misuse still looms large.

The emergence of these facial recognition features may compel Meta to clarify its protocols for data retention and transparency. Explaining how and why its facial recognition systems collect, analyze, or store biometric data is critical to building user trust. With the debate over the ethics of facial recognition ongoing, the time is ripe for rigorous discussion of the future of such systems on social media.

As Meta navigates the shifting terrain of facial recognition, it must prioritize user security while remaining mindful of the broader implications of its actions. The promise of stronger security stands in tension with the ethical dilemmas raised by the use of biometric data. How Meta strikes this balance will define not only its approach to technology but also its relationship with users and stakeholders in an increasingly scrutinized digital landscape.

In returning to facial recognition, Meta must keep a steady focus on transparency, ethical use, and user engagement if it is to avoid repeating past mistakes and build a sound framework for future deployments.