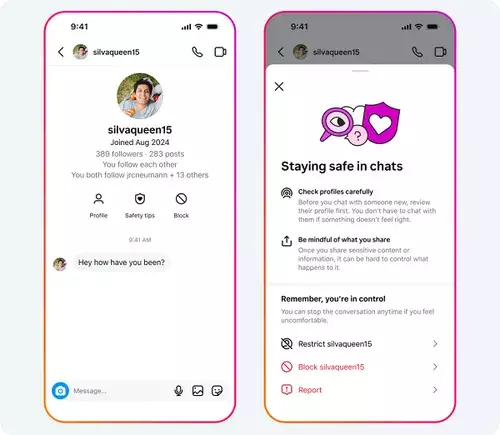

Meta’s latest enhancements signal a commendable, albeit complex, effort to prioritize adolescent safety in an increasingly perilous digital landscape. The company’s introduction of “Safety Tips” prompts within Instagram chats reflects a recognition that young users need proactive guidance amid the often murky waters of online interaction. By offering direct links on how to spot scams and interact responsibly, Meta aims to foster a sense of empowerment among teens. Yet the question remains: will such superficial nudges be enough to counteract the deeply ingrained risks they face?

More notably, the new quick blocking features embedded in direct messages enable teens to act swiftly against potential threats. The added context about when an account was created is a subtle but significant move to help users judge the credibility of their contacts. This layer of transparency could discourage predatory behavior or, at the very least, make young users more cautious during interactions. Furthermore, consolidating the block and report functions streamlines the response process, which is crucial in moments of distress, when hesitation could be costly.

Yet, while these features seem user-centric on the surface, they also hint at a deeper acknowledgment: teens are navigating a crowded, often toxic digital environment. Simplified tools are essential, but they must be part of a broader, more aggressive strategy that actively monitors and counteracts harmful actors. Meta’s focus on ease of use for reporting indicates an understanding of how overwhelmed users can be, but it also underscores the ongoing dependence on individual users to take action rather than the platform proactively identifying and removing threats.

Guarding Against Vulnerability and Exploitation

Meta’s efforts aren’t confined to reactive safety measures. The platform takes a proactive stance by keeping teen accounts out of the recommendations shown to adults it has flagged as suspicious. This step aims to close off one of the most insidious avenues of exploitation: predators who use social media’s vast reach to target minors. Evidence of past failures, such as the removal of hundreds of thousands of accounts with sexualized content or requests, reinforces the importance of such preventive measures.

However, these numbers also serve as a grim reminder of the scale of the problem. The existence of nearly 135,000 accounts involved in sexual exploitation of minors on Instagram alone illustrates that technological safeguards are still catching up to predators’ ingenuity. Meta’s transparency in releasing these figures is a double-edged sword: it signifies accountability but also highlights the magnitude of the challenge at hand.

Additionally, expanding protective features like nudity filters, location disclosures, and message restrictions demonstrates a layered approach designed to diminish minors’ exposure to danger. While these measures appear robust, a skeptic might argue that the persistence of problematic content indicates that technology alone cannot solve the deeper societal issues underlying online abuse. These updates, though valuable, risk becoming token gestures if not backed by continuous oversight, rapid response teams, and user education.

The Broader Context: Demanding Stronger Regulations and Ethical Leadership

Meta’s support for raising the legal age for social media access across the European Union reveals an awareness that structural change is necessary for meaningful safety. By endorsing policies that restrict access until users reach a certain age, Meta aligns itself with regulatory efforts. Yet beneath this political maneuvering lies a strategic desire to shape the narrative around user safety.

The move to advocate for a more uniform age limit (potentially raising it to 16) can be viewed as both a moral stance and a strategic repositioning. It signals that Meta understands the vulnerabilities of younger teens and recognizes that platform safety is not solely a technological challenge but also one rooted in legal and societal frameworks. However, the practicality of enforcing such age restrictions merits skepticism; age verification remains notoriously unreliable, and the risk of underage access persists.

While Meta’s safety features are laudable, they raise critical questions about platform accountability. Do these measures genuinely protect users or merely serve to insulate Meta from criticism? Is the company investing enough resources in combating misuse, or are these efforts cosmetic patches on a fundamentally flawed system? The urgency of these concerns underscores that technological safeguards, no matter how sophisticated, must be integrated into a broader ecosystem of regulation, education, and cultural change.

Ultimately, Meta’s evolving approach suggests a desire to maintain relevance and consumer trust amid mounting criticism and regulatory pressure. Let’s be clear: user safety isn’t just an ethical priority; it’s a strategic imperative for the future of social media. However, whether these new policies will result in a safer environment for teens hinges on how sincerely they are implemented and on the platform’s willingness to confront the darker realities lurking beneath its shiny interface.