Collaborative models are an active area of innovation in artificial intelligence and natural language processing. One notable advance is Co-LLM, an algorithm developed by researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL). As language models become essential in more applications, how these systems can work together efficiently has become a compelling research question. Co-LLM aims to refine the interaction between a general-purpose language model and a specialized model, improving both the accuracy and the efficiency of responses.

Artificial intelligence language models, particularly large language models (LLMs), have transformed the way computers understand and generate human language. However, a critical limitation of these models is their inability to determine when additional expertise might enhance the quality of their output. For example, when asked to provide specific information—such as details about medical conditions or mathematical solutions—general-purpose LLMs can falter due to their broad but shallow understanding of specialized topics.

Traditionally, improving accuracy in LLMs has relied on extensive labeled data or complex algorithms, making the process cumbersome and inflexible. This raises the question: how can we train models to recognize when they need to “phone a friend”? The MIT researchers saw the value of a more organic approach and developed Co-LLM to bridge this gap.

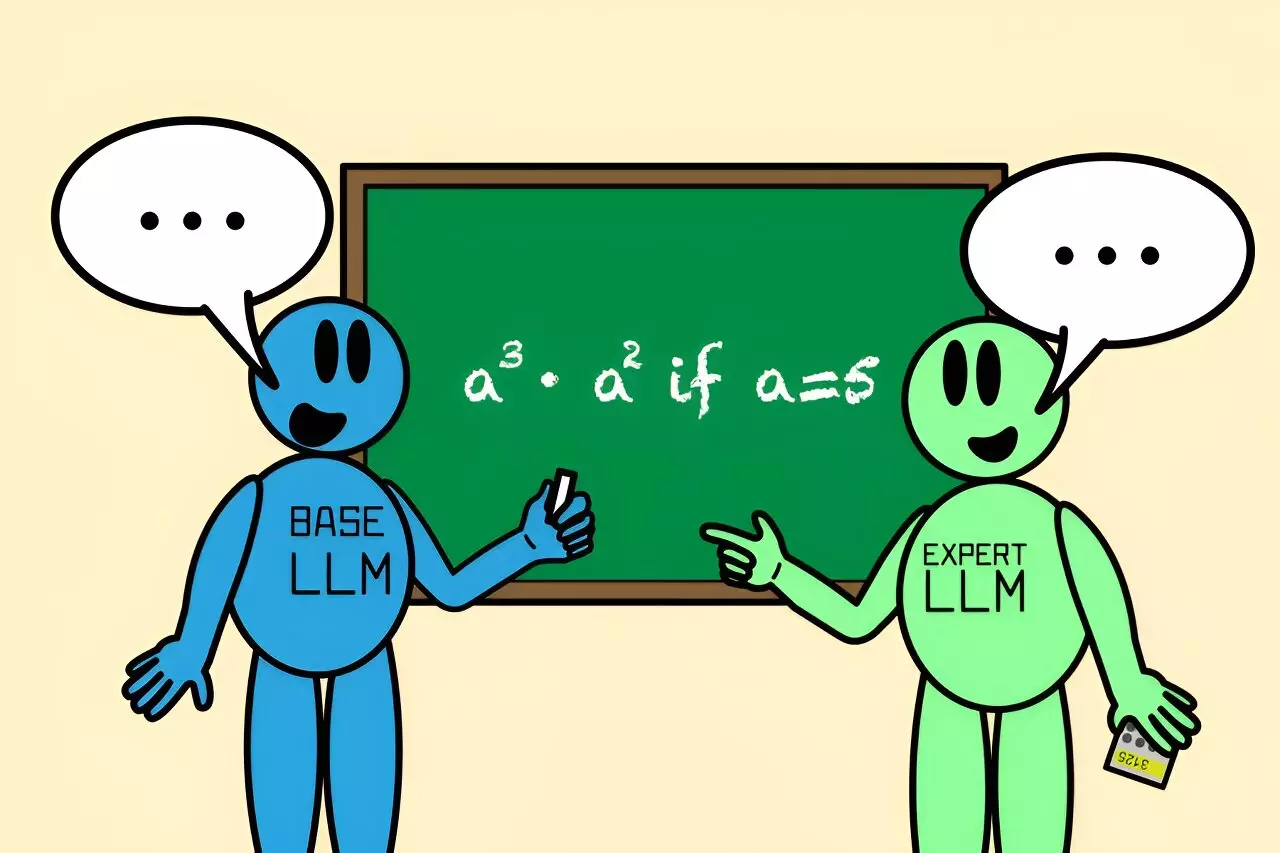

At the heart of Co-LLM is a mechanism that lets a general-purpose LLM work alongside a specialized model, the two operating in tandem to produce more accurate responses. The algorithm’s core innovation lies in its use of a “switch variable,” which functions much like a project manager. This variable evaluates the competence of each word generated by the general model and decides when to integrate input from the specialized model.

For instance, when Co-LLM is prompted to provide examples of extinct bear species, the general-purpose model generates an initial response. The switch variable continually reviews this output for opportunities to enhance it with more precise details or tokens from the expert model. Such collaboration allows Co-LLM to create responses that are not only correct but also efficiently generated, as the expert model’s input is only solicited when necessary.
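The word-by-word deferral described above can be illustrated with a minimal sketch. It is not the researchers' implementation: the function `collaborative_decode`, the scripted toy models, the hand-written switch rule, and the `threshold` parameter are all hypothetical names introduced here, and the real switch variable is learned during training rather than hard-coded. The sketch only shows the control flow, in which the expert is consulted for a token only when the switch calls for it.

```python
from typing import Callable, List, Sequence, Tuple

EOS = "<eos>"  # hypothetical end-of-sequence marker

# Hypothetical interfaces: a "model" maps the tokens so far to the next token,
# and a "switch" maps the tokens so far to a deferral score in [0, 1].
NextToken = Callable[[Sequence[str]], str]
Switch = Callable[[Sequence[str]], float]


def collaborative_decode(
    base: NextToken,
    expert: NextToken,
    switch: Switch,
    prompt: Sequence[str],
    max_tokens: int = 20,
    threshold: float = 0.5,
) -> Tuple[List[str], List[str]]:
    """Generate tokens, deferring to the expert when the switch score is high.

    Returns the generated tokens and, for each one, which model produced it.
    """
    tokens = list(prompt)
    sources: List[str] = []
    for _ in range(max_tokens):
        use_expert = switch(tokens) > threshold
        token = (expert if use_expert else base)(tokens)
        if token == EOS:
            break
        tokens.append(token)
        sources.append("expert" if use_expert else "base")
    return tokens[len(prompt):], sources


def make_scripted_model(script: Sequence[str], prompt_len: int) -> NextToken:
    """Toy stand-in for a language model: replays a fixed script of tokens."""
    def next_token(tokens: Sequence[str]) -> str:
        offset = len(tokens) - prompt_len
        return script[offset] if 0 <= offset < len(script) else EOS
    return next_token


# Toy setup (assumption): the base model is fluent but unreliable on the fact,
# and the expert knows the fact.
prompt = ["question", ":"]
base = make_scripted_model(["the", "answer", "is", "maybe-wrong"], len(prompt))
expert = make_scripted_model(["the", "answer", "is", "verified-fact"], len(prompt))


def switch(tokens: Sequence[str]) -> float:
    # Hand-written rule standing in for the learned switch:
    # defer right after "is", where the factual token is expected.
    return 0.9 if tokens and tokens[-1] == "is" else 0.1


generated, sources = collaborative_decode(base, expert, switch, prompt)
print(" ".join(generated))
print(sources)
```

In this toy run the base model writes "the answer is" and the expert supplies only the final token, so the expert is called once out of four generation steps. That selective calling is the source of the efficiency the article describes.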

The versatility of Co-LLM was tested using domain-specific datasets like BioASQ, which focuses on biomedical queries. This allowed the researchers to demonstrate how Co-LLM could better answer questions typically reserved for human experts, such as the mechanisms behind specific diseases. In some documented cases, a general LLM misstated essential information, for example by incorrectly naming the ingredients of a prescription drug. Within the Co-LLM framework, however, the specialized biomedical model can step in to provide accurate and reliable information.

Additionally, the algorithm’s capability to handle complex mathematical problems was showcased when Co-LLM collaborated with a specialized math model called Llemma. On its own, the general-purpose model miscalculated the solution to a problem involving powers; working with the math expert model under Co-LLM, it arrived at the correct answer. This collaboration not only yielded a correct response but also resulted in a more efficient answer-generation process compared to traditional methods.

The potential impact of Co-LLM extends far beyond mere accuracy. Researchers are exploring how to leverage human self-correction methods to fine-tune the algorithm further. By incorporating a more robust deferral approach, Co-LLM could backtrack and make adjustments when the specialized model fails to yield an accurate response. This opportunity for corrective measures would enhance the model’s reliability, ensuring users receive satisfactory outputs.

Furthermore, there are aspirations to provide regular updates to the expert model without having to retrain it from scratch. By maintaining an up-to-date repository of knowledge, Co-LLM could assist in a wide range of applications, from enterprise document management to niche fields requiring the latest research insights.

By enriching collaboration between models, Co-LLM could lead to significant strides in natural language processing. As researchers continue to refine its mechanisms and explore its applications, Co-LLM may serve as a framework for future AI systems that are not only smarter but also far more efficient in delivering accurate information to users worldwide.