Consumers routinely judge food quality by eye, particularly when picking fresh produce from grocery store displays. The visual appeal of food, especially fruits and vegetables, plays a crucial role in those choices. But is there a technological solution that can consistently aid in this process? Recent research from the Arkansas Agricultural Experiment Station proposes combining human perception with machine learning to improve how food quality is assessed.

Dongyi Wang, an assistant professor specializing in smart agriculture and food manufacturing, led the study, which was recently published in the Journal of Food Engineering. The research highlights how current machine-learning models fall short of people's ability to evaluate food quality, especially under varying environmental conditions. Unlike human evaluators, machines have traditionally struggled to adapt to different lighting, which can significantly influence perceptions of freshness and quality.

The study's central finding is that machine-learning models predict food quality more accurately when they are trained on data reflecting human judgment across different lighting conditions. Wang points out that "to establish the reliability of machine-learning models, an understanding of human reliability must precede it." This approach not only incorporates human insights but also acknowledges the variability inherent in human perception.

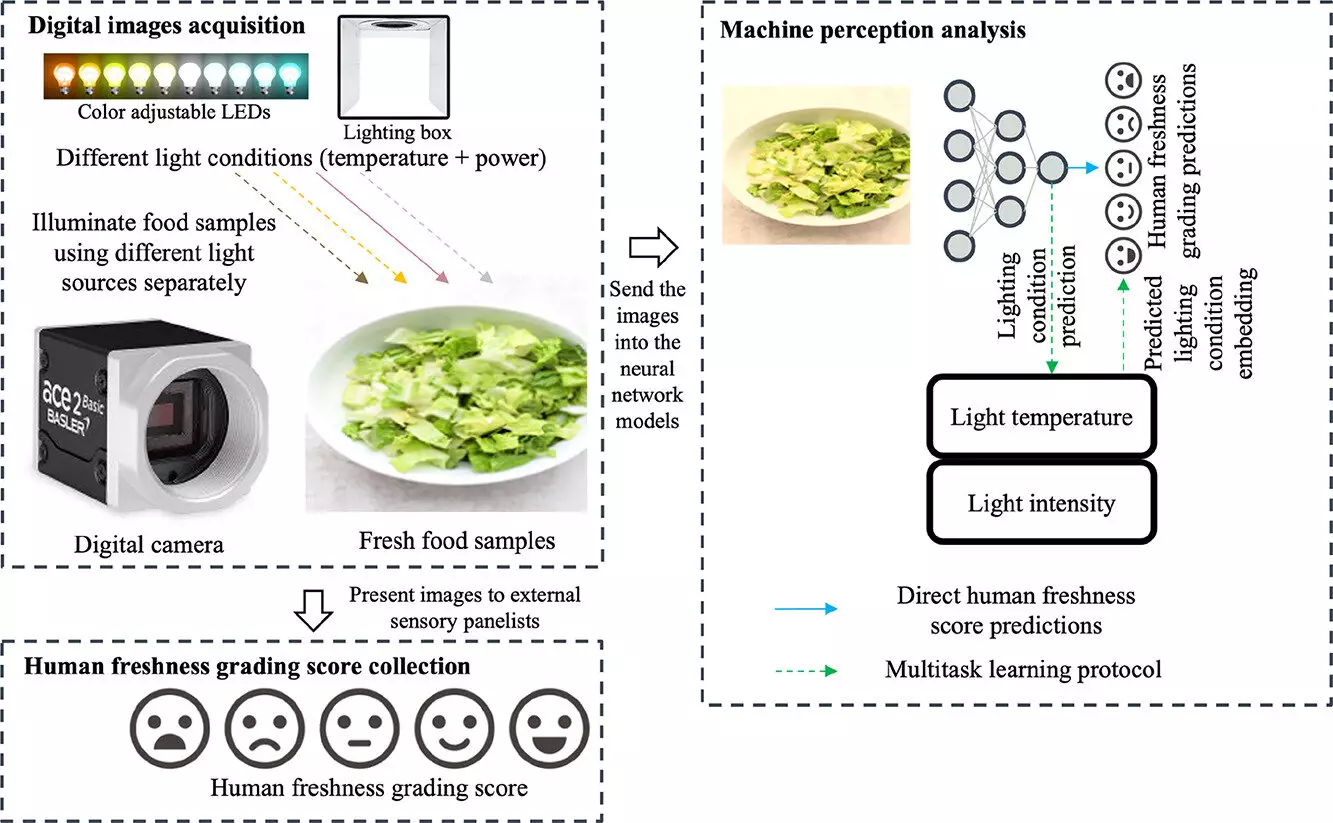

One of the study’s key revelations is the substantial impact of illumination on human perception of food quality. For instance, while warm lighting may mask browning in produce, cooler tones can expose quality degradation. By using lettuce samples, the researchers examined how different lighting variables influenced participants’ evaluations of product freshness. This study involved 109 participants, ensuring a broad demographic representation. Each participant engaged in multiple sensory sessions, assessing images under varied lighting conditions, ultimately creating a comprehensive dataset.

This dataset is crucial because it allows machine-learning models to be trained on human perception data alongside traditional computer vision algorithms. In the researchers' comparison, integrating human observational data into the model reduced prediction errors by 20%.
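To make the 20% figure concrete, here is a minimal sketch of how a relative reduction in prediction error is computed. The error values are hypothetical, chosen only to illustrate the arithmetic; the article does not report the underlying error metric or its absolute values.

```python
def relative_error_reduction(baseline_error: float, new_error: float) -> float:
    """Return the fraction by which new_error is lower than baseline_error."""
    if baseline_error <= 0:
        raise ValueError("baseline_error must be positive")
    return (baseline_error - new_error) / baseline_error


# Hypothetical error values (not taken from the study).
baseline_mae = 0.50        # assumed error of a model without human perception data
human_informed_mae = 0.40  # assumed error after adding human perception data

reduction = relative_error_reduction(baseline_mae, human_informed_mae)
print(f"{reduction:.0%}")  # prints "20%"
```

A relative reduction says nothing about the absolute error: the same 20% could come from 0.50 falling to 0.40 or from 5.0 falling to 4.0.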

The researchers applied neural networks to the images graded by human evaluators. By closely mimicking human grading, these models can provide a more consistent assessment of food quality. The integration of sensory science and machine learning appears promising, especially since most existing computer vision systems overlook how human perception varies with environmental conditions.

As noted in the study, traditional models primarily rely on static human-labeled databases or simple color metrics without factoring in the variables that can distort perception, such as lighting. This oversight is significant; machine learning applications could benefit greatly from a more nuanced understanding of human visual experiences.
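The contrast between a lighting-blind metric and a lighting-aware model can be illustrated with a toy example. The sketch below is not the study's method (which used neural networks on images): it fits simple least-squares lines to synthetic data, and it assumes that warm light hides half of the visible browning, loosely following the article's remark that warm lighting may mask browning. All names, numbers, and the data generator are hypothetical.

```python
import random
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my - b * mx, b


def make_samples(n, rng):
    """Synthetic (browning, lighting, human_score) triples; not real data."""
    samples = []
    for _ in range(n):
        browning = rng.random()
        lighting = rng.choice(["warm", "cool"])
        # Assumption: warm light hides half of the visible browning.
        visible = browning * (0.5 if lighting == "warm" else 1.0)
        score = 10.0 - 6.0 * visible + rng.gauss(0.0, 0.2)
        samples.append((browning, lighting, score))
    return samples


rng = random.Random(0)  # fixed seed so the sketch is reproducible
train = make_samples(200, rng)
test = make_samples(100, rng)

# Lighting-blind baseline: one line fitted to all samples.
a, b = fit_line([s[0] for s in train], [s[2] for s in train])
blind_mae = mean(abs(a + b * s[0] - s[2]) for s in test)

# Lighting-aware model: one line per lighting condition.
models = {}
for light in ("warm", "cool"):
    subset = [s for s in train if s[1] == light]
    models[light] = fit_line([s[0] for s in subset], [s[2] for s in subset])
aware_mae = mean(
    abs(models[s[1]][0] + models[s[1]][1] * s[0] - s[2]) for s in test
)

print(f"lighting-blind MAE: {blind_mae:.2f}")
print(f"lighting-aware MAE: {aware_mae:.2f}")
```

On this synthetic data the lighting-aware fit tracks the simulated human scores more closely, because the blind model has to average over two different relationships between browning and perceived freshness. The size of the gap depends entirely on the assumed masking effect and should not be read as an estimate of the study's results.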

The implications of Wang’s study reach beyond food evaluation. The methodology for training machine vision systems using human perception could extend to other industries, from jewelry assessment to quality control in manufacturing. The same principles that govern how we assess produce may also govern how consumers evaluate other products by sight. The study underscores the importance of interdisciplinary approaches that combine sensory science, engineering, and computer science.

The research spearheaded by Wang and his colleagues marks a significant stride toward enhancing food quality assessments through machine learning. With applications poised to extend into various areas, this study illustrates the profound potential of integrating human sensory insights into computational models.

As grocery stores and food processing facilities look to bolster their quality assurance systems, these findings could support a new approach to food quality evaluation, one that not only improves the predictability of machine assessments but also aligns them more closely with human experiences. As technology continues to evolve, so too will the possibilities for creating systems that better reflect our nuanced perceptions of quality in the foods we consume.