In the fast-changing landscape of social media, platforms must balance user expression against community guidelines. Threads, a relatively new entrant in the microblogging space, has taken a notable step toward a better user experience by launching its Account Status feature. The tool lets users see what moderation actions have been applied to their content and understand how their posts measure up against the platform’s community standards. Many social media platforms give users little visibility into moderation decisions. Threads offers a clearer view of that process, which makes its enforcement more accountable.

Understanding the Mechanism Behind Account Status

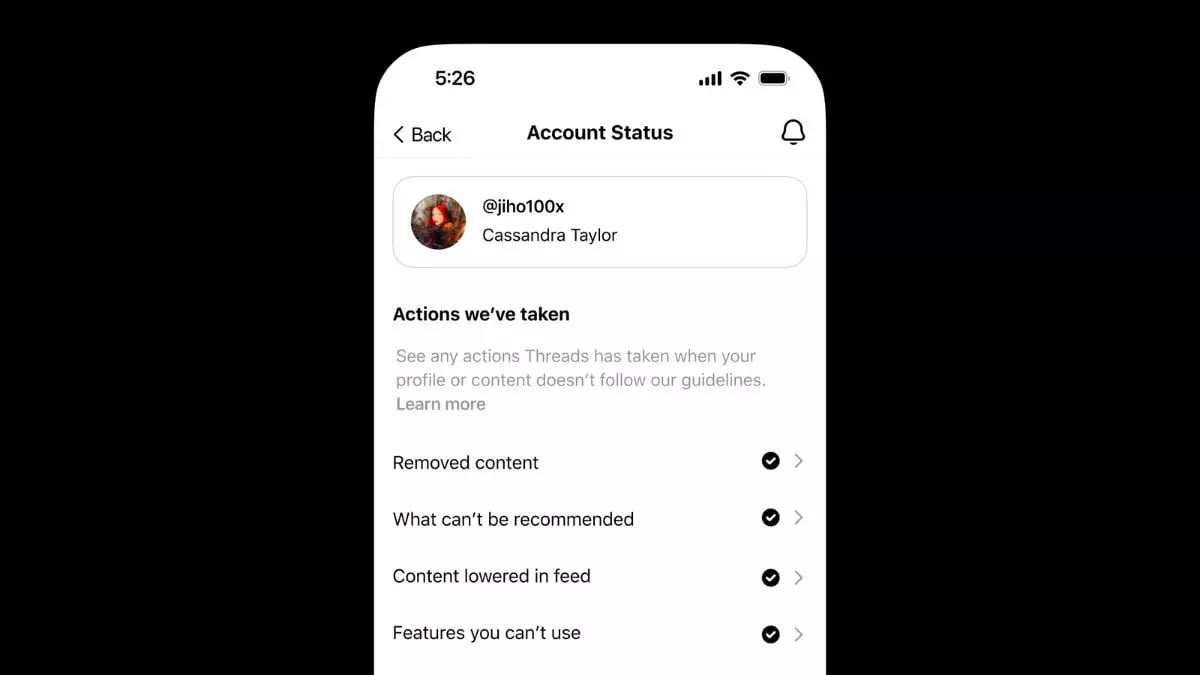

At its core, the Account Status feature notifies users about actions taken against their posts, such as removal or demotion. The information is available from the app’s settings menu, so it is easy to find. Account Status covers four possible actions: removal, non-recommendation, lowered visibility, and feature restrictions. By showing which of these apply and why, Threads keeps users informed about how their content is being treated. The feature holds the platform accountable for its decisions and gives users the information they need to understand their standing within the community.

Advocating for User Rights and Fairness

One of the most commendable aspects of the new feature is the ability to contest decisions through an appeals process. Users who believe their content was moderated unfairly can submit a request for review. They receive a notification once the review is complete, so they stay informed about the outcome. This gives users a direct voice in moderation decisions that affect them. That transparency matters at a time when users often feel powerless against seemingly arbitrary moderation, particularly on larger social platforms.

The Role of Community Standards in Content Moderation

The feature is not without caveats, however. Threads enforces strict community standards that emphasize authenticity, dignity, and safety. Freedom of expression matters, but moderation is inherently subjective, which raises questions about how “safety” is defined and enforced. As Threads handles sensitive subjects, including AI-generated content, it must leave room for diverse viewpoints without compromising user safety. Striking that balance requires ongoing dialogue between the platform and its users.

User Empowerment Through Transparency

Threads’ Account Status feature is a forward-thinking effort to improve the user experience while maintaining community integrity. By adding an appeals process and clearly listing moderation actions, Threads has set a strong example for transparency. This can build user trust and encourage more meaningful interactions within the app. Moderation will never be perfect, but the commitment to educating and engaging users is commendable. At a time when users want more control over their digital presence, Threads appears well placed to give it to them.