In artificial intelligence, the scale of large language models (LLMs) has drawn significant attention. Companies like OpenAI, Meta, and Google have released models containing hundreds of billions of parameters, the adjustable settings that determine how these systems interpret and connect data. More parameters let a model recognize more patterns within its training data, which makes these models more capable. However, “bigger is better” comes with its own set of challenges. Training these colossal models is extremely expensive; for example, Google reportedly spent approximately $191 million training its Gemini 1.0 Ultra model. The immense computational resources required to train and deploy such models also carry a considerable environmental footprint: by one estimate, a single query to ChatGPT consumes nearly ten times as much energy as a standard Google search.

The Shift Towards Smaller, More Efficient Models

Given the limitations associated with LLMs, researchers have begun to reconsider the potential benefits of smaller models, known as small language models (SLMs). Companies like IBM, Google, and Microsoft have begun releasing SLMs with a few billion parameters rather than hundreds of billions. These smaller models work well on specific tasks rather than as catch-all tools. For instance, SLMs can efficiently summarize text or answer questions as interactive chatbots, and they can be embedded in smart devices and applications.

The case for smaller models is a compelling argument against the current obsession with scaling up every component of AI systems. A more focused model can often perform just as well on a particular task without the burdensome costs of its larger counterparts. Zico Kolter, a computer scientist at Carnegie Mellon University, remarks that “for a lot of tasks, an 8 billion-parameter model is actually pretty good.” The observation highlights how much potential remains in simpler designs.
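
To put the parameter counts above into hardware terms, here is a rough back-of-the-envelope sketch. It assumes each parameter is stored as a 16-bit number (2 bytes), which is a common choice but not something the article specifies, and it counts only the weights themselves. The function name `weight_memory_gb` and the 200-billion figure are illustrative, not taken from any particular model.

```python
def weight_memory_gb(n_params, bytes_per_param=2):
    """Approximate memory needed just to hold the weights, in GB (1 GB = 1e9 bytes).

    Assumes 2 bytes per parameter (16-bit precision) unless told otherwise.
    Ignores activations, caches, and any other runtime overhead.
    """
    return n_params * bytes_per_param / 1e9


small = weight_memory_gb(8e9)     # the 8-billion-parameter scale Kolter mentions
large = weight_memory_gb(200e9)   # a hypothetical 200-billion-parameter model

print(f"8B parameters:   about {small:.0f} GB of weights")
print(f"200B parameters: about {large:.0f} GB of weights")
```

Under these assumptions the smaller model needs about 16 GB for its weights, within reach of a single well-equipped machine, while the larger one needs about 400 GB and has to be spread across many accelerators.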

Techniques Driving Efficiency

To make these smaller models effective, researchers have developed techniques for training them efficiently. Larger models are typically trained on vast amounts of uncurated data gathered from the internet. This data can be messy and disorganized, which complicates training. Small models can instead rely on knowledge distillation, in which a larger model acts as a teacher to a smaller one and passes on what it has learned. Because the teacher supplies a cleaner, higher-quality training signal than raw web text, the student can reach good results faster and with less data.
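
One common way to formalize the teacher-student idea is to train the small model to match the large model's output probabilities. The sketch below shows that formulation only; the article does not say which variant any particular lab uses. The logits, the `temperature` value, and the function names are all made up for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    The loss is zero when the student reproduces the teacher exactly and
    grows as the two distributions move apart.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical scores over three candidate tokens.
teacher = [4.0, 1.0, 0.5]
good_student = [3.5, 1.2, 0.4]   # roughly agrees with the teacher
poor_student = [0.5, 1.0, 4.0]   # ranks the tokens the other way round

print(distillation_loss(teacher, good_student))
print(distillation_loss(teacher, poor_student))
```

The student that agrees with the teacher gets the smaller loss, so minimizing this quantity during training pulls the small model toward the large model's behavior. In practice this term is usually combined with an ordinary loss on the true labels.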

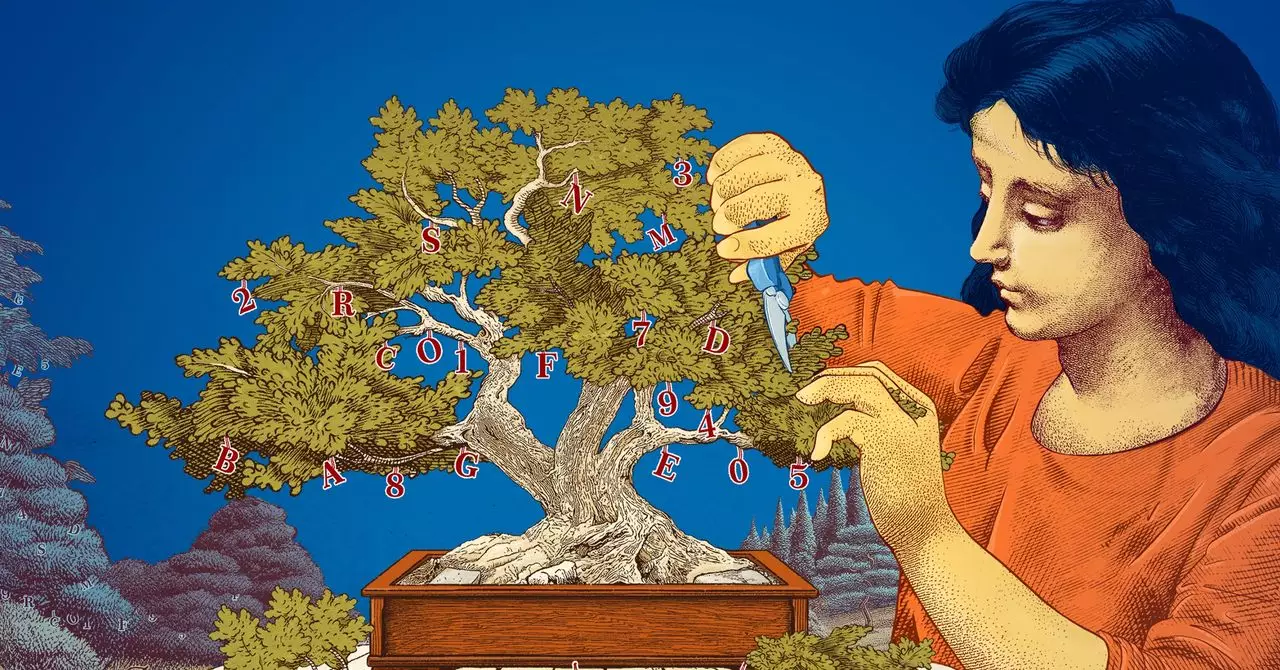

Pruning is another approach. Drawing inspiration from the efficiency of the human brain, pruning removes unnecessary connections within a neural network, streamlining the model’s structure. The practice traces back to a 1989 paper by computer scientist Yann LeCun, who argued that up to 90% of the parameters in a trained network could be discarded without compromising function. Pruning can help researchers fine-tune and specialize a small language model for a specific application, improving its performance while reducing computational requirements.
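
As a minimal illustration of the idea, the sketch below zeroes out the weights with the smallest absolute values. This is plain magnitude pruning, a common simplification chosen here for brevity; it is not the specific method the text attributes to LeCun, and the weights and the function name `magnitude_prune` are invented for the example.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_drop = set(order[:n_prune])
    return [0.0 if i in to_drop else w for i, w in enumerate(weights)]


# Hypothetical weights from one small layer.
weights = [0.8, -0.05, 0.3, 0.01, -1.2, 0.02, 0.6, -0.04, 0.07, 0.1]
pruned = magnitude_prune(weights, sparsity=0.5)

print(pruned)
print(sum(1 for w in pruned if w == 0.0), "of", len(pruned), "weights removed")
```

Half of the connections are removed while the largest weights, which contribute most to the output, are left untouched. A real pipeline would typically retrain the network briefly after pruning to recover any lost accuracy.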

The Advantages of Small Models for Research and Application

The rise of small language models represents not only a shift in technical approach but also a new way of working for researchers and practitioners in AI. Smaller models offer an inexpensive way to test new ideas in a field where the complexity and cost of larger models can be daunting. As Leshem Choshen of the MIT-IBM Watson AI Lab puts it, SLMs allow experimentation with “lower stakes,” which encourages innovation without the fear of costly failure.

Moreover, because they have fewer parameters, smaller models may reach their decisions in ways that are easier to interpret. This transparency helps researchers understand AI behavior, an increasingly important concern as AI systems become woven into everyday life. While LLMs will likely continue to dominate tasks that require broad generalization, the targeted efficiency of SLMs is likely to make them a staple in applications ranging from healthcare chatbots to personalized smart devices.

In a rapidly evolving technological landscape, smaller language models are not just a trend; they are a necessary evolution that champions efficiency, affordability, and innovation.