In an era where privacy feels increasingly under siege, Signal, a messaging platform known for its commitment to user confidentiality, has taken a clear stand by launching a security feature aimed at blunting AI-driven surveillance. Microsoft’s Recall feature, which records user activity by periodically capturing what is on screen, cuts to the core of what users expect from their devices and raises significant privacy concerns. As such technology spreads, the line between helpful innovation and invasive monitoring blurs, a trend Signal has chosen to challenge head-on.

Introducing Screen Security: A Necessity, Not a Luxury

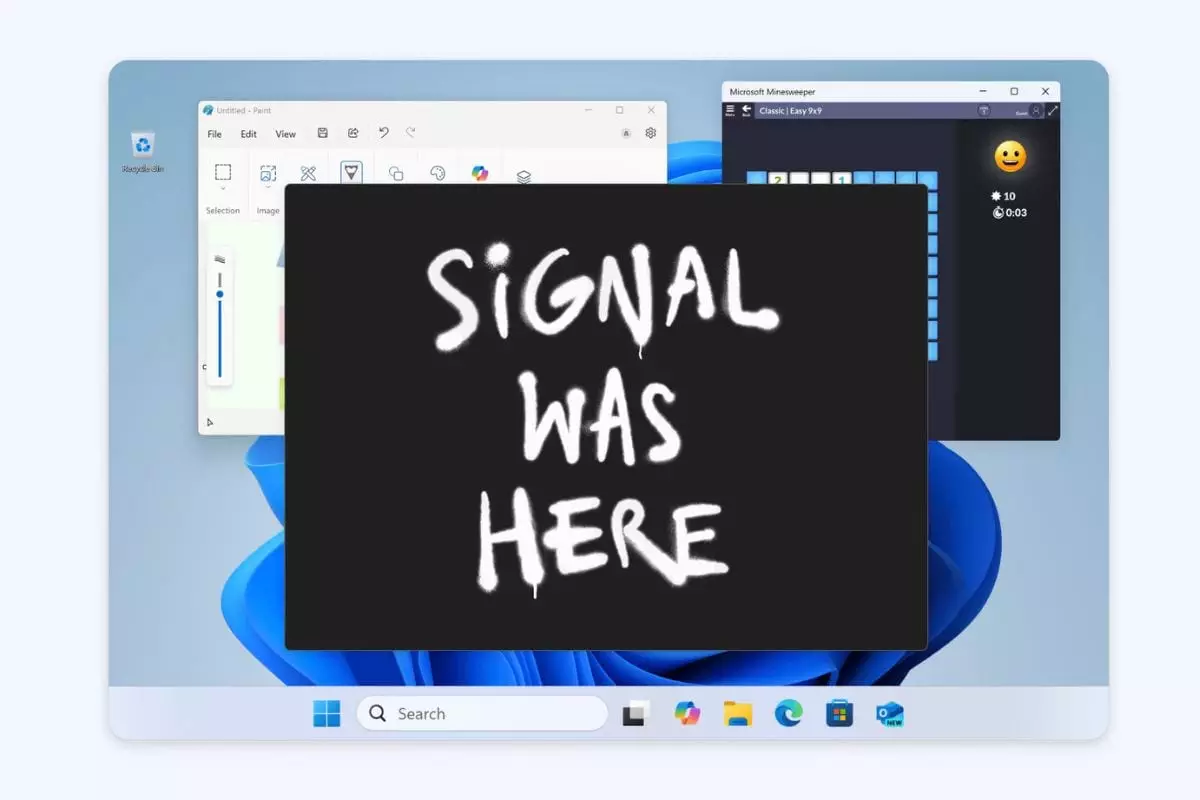

Signal’s response to Recall is its “Screen Security” setting, which prevents Windows from capturing screenshots of Signal’s application windows. The feature is less an enhancement than a necessity, given how little control developers have over the way an operating system interacts with their apps. Screen Security is enabled by default for all Windows 11 users, a proactive stance that acknowledges how exposed even encrypted conversations become once they are displayed on screen.

The move reflects a conviction that user privacy should not be up for negotiation in a landscape often dominated by corporate agendas. As surveillance mechanisms multiply, robust protective measures become essential. Users should feel secure, not scrutinized, when they hold private conversations. Signal’s action is both a practical safeguard and an expression of a philosophy that places user trust above all.

Microsoft’s Recall Feature: A Privacy Red Flag

Launched initially as a feature to help users navigate their digital activities, Microsoft’s Recall took a turn that many did not anticipate. Despite its purported benefits of easy access to digital histories, the backlash it faced from privacy advocates highlights a fundamental flaw in its approach: the absence of user-centric privacy controls. The data-hungry nature of the AI feature has opened a Pandora’s box of ethical dilemmas, challenging the very idea of personal agency in a digital ecosystem.

While Microsoft has since made efforts to adapt Recall to address some privacy concerns, critics argue that these adjustments do not adequately resolve the fundamental issue: users should be able to decide what data is collected, how it is stored, and for what purpose. Without substantial user control, tools like Recall risk fostering an environment where surveillance becomes normalized. This is where Signal’s new measure steps in—a necessary pushback against a narrative that renders individuals’ experiences as secondary to tech advancements.

The Balancing Act: Privacy vs. Accessibility

A telling aspect of Signal’s new feature is its acknowledgment that Screen Security may introduce accessibility problems. As privacy protections grow more complex, organizations must ensure that stronger security does not inadvertently hamper usability, especially for people who rely on assistive technologies.

Signal’s commitment to offering a toggle for Screen Security respects the diverse needs of its user base. It’s crucial, however, that as privacy initiatives evolve, they do not inadvertently disenfranchise users who depend on certain functionalities to access digital communications. By providing a warning when the feature is disabled, Signal takes an important step, underscoring the necessity of communicating the risks rather than leaving users in the dark.
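The behavior described here (on by default for Windows 11, a user-facing toggle, and a warning when protection is switched off) can be sketched in TypeScript. This is an illustrative sketch under stated assumptions, not Signal’s actual implementation: `ProtectableWindow`, `isWindows11`, `applyScreenSecurity`, and the warning text are hypothetical names introduced for this example. The `setContentProtection` method mirrors the method of the same name on Electron’s `BrowserWindow`, and the platform check assumes the operating system reports a `10.0.<build>` version string with builds 22000 and later being Windows 11.

```typescript
// Hypothetical minimal shape of a window that can be excluded from screen capture.
// Electron's BrowserWindow has a method with this name; the interface itself is illustrative.
interface ProtectableWindow {
  setContentProtection(enable: boolean): void;
}

// Windows 11 still reports a 10.0.x version; builds 22000 and later are Windows 11.
function isWindows11(platform: string, release: string): boolean {
  if (platform !== "win32") return false;
  const parts = release.split(".");
  const build = Number(parts[2]); // NaN when the build segment is missing
  return parts[0] === "10" && build >= 22000;
}

// Hypothetical warning text shown when the user turns protection off.
const DISABLE_WARNING =
  "With Screen Security off, the operating system may capture screenshots of your chats.";

// Applies the user's choice and returns a warning to display, or null if none is needed.
function applyScreenSecurity(win: ProtectableWindow, enabled: boolean): string | null {
  win.setContentProtection(enabled);
  return enabled ? null : DISABLE_WARNING;
}
```

In an Electron app, the default would come from something like `isWindows11(process.platform, os.release())`, with the user’s saved preference overriding it. Returning the warning from the same function that applies the setting keeps the two from drifting apart, so protection is never disabled silently.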

Charting a Path Forward for Privacy-Centric Solutions

Signal’s initiative is a clarion call for developers and tech companies to reconsider their role in protecting privacy. As the company asserts, AI systems like Microsoft’s Recall must be designed with close attention to their implications for users. Users should not be the product, but informed participants whose preferences shape their experiences. Companies like Signal demonstrate that proactive measures are essential to safeguarding user privacy amid rapid technological change.

Thus, while the technological landscape continues to evolve, so too should our frameworks for privacy and user control. As Signal demonstrates, proactive and thoughtful responses can drive a fundamental shift in how user privacy is preserved, reinforcing the idea that your conversations are your own—an ideal worth fiercely protecting in a digital age poised for both remarkable advancement and potential encroachment.